Server RAM

Clarification of terms

Although the term "server RAM" is not uniformly defined, server RAM differs significantly from RAM for industrial applications or the consumer sector. The differences lie in the performance and security requirements that come with server applications. For additional security, servers therefore mostly use RDIMM memory modules. In this article, we take a closer look at these requirements for server RAM.

Table of contents

- Definition of server RAM
- Server RAM requirements
  - Performance
    - Speed
    - Capacity
    - Latency
    - Bandwidth
  - Energy efficiency
  - Security
    - RDIMM (Registered Dual Inline Memory Module)
    - ECC (Error Correcting Code)
- Conclusion

Definition of server RAM

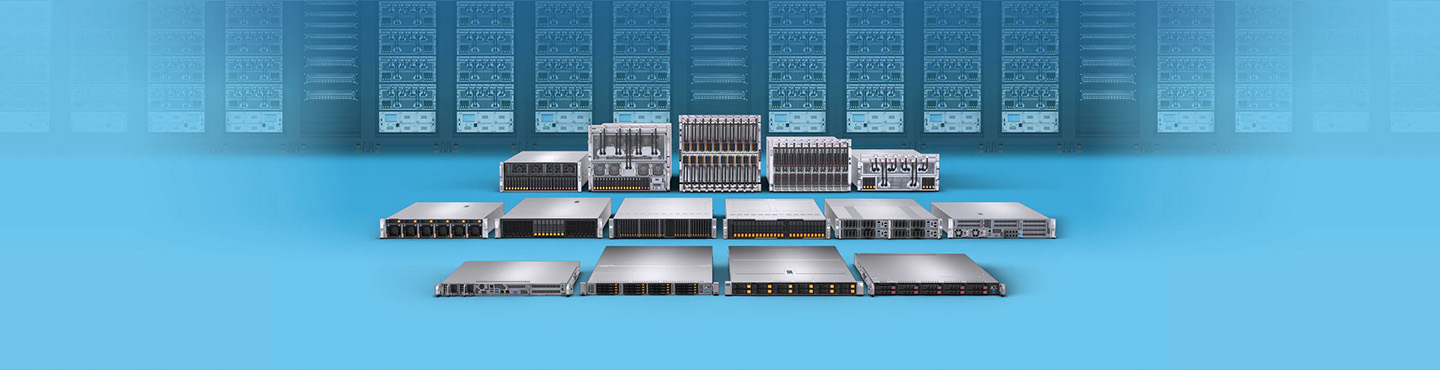

In this article, we define server RAM as the main memory used by applications in a server environment. That much is not surprising. The range of application areas is broad and includes, among others:

- Cloud Computing

- Virtualization

- High-Performance Computing

- Database servers

- Telecommunications and network infrastructure

We deliberately do not list data centers because they are not applications, but the facilities that provide the necessary hardware and network resources to enable various applications and services.

This list is by no means exhaustive, but it shows the variety of application areas in which server RAM is used and is sufficient to illustrate the extent to which performance and reliability of RAM are decisive for the stability and efficiency of systems.

Server RAM requirements

The requirements for main memory in server applications vary with the application and area of use. In general, however, the following factors are particularly relevant:

Performance

Here, performance covers the properties that directly influence how well the main memory performs, such as speed, latency and capacity. These play an important role in every application area, but they are particularly relevant for servers because of the large amounts of data they process.

Speed

RAM speed is often measured in megatransfers per second (MT/s) and refers to the number of data transfers that RAM can perform per second. Higher speeds can lead to faster data processing and thus improve the overall performance of the system. For example, Samsung DDR5 modules achieve speeds of up to 7200 MT/s.

Megahertz (MHz) specifies a clock frequency: how many million cycles of a periodic signal occur per second. Here, this clock governs the data transfer between RAM and processor. In SDR SDRAM, the predecessor of DDR SDRAM, data was transferred only on the rising edge of the signal, i.e., once per cycle. MHz was therefore also a good measure of the data rate: one cycle, one data transfer. Since the introduction of DDR (Double Data Rate) technology, data is transmitted on both the rising and the falling edge of the signal, i.e., twice per cycle. For this reason, MT/s is now commonly used, as it states the effective data rate more precisely.
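Because DDR memory makes two transfers per clock cycle, the data rate in MT/s is simply twice the I/O clock frequency in MHz. The following minimal Python sketch shows that conversion; the function names are our own, chosen for illustration.

```python
# DDR (Double Data Rate) memory transfers data on both the rising and the
# falling clock edge, i.e. two transfers per clock cycle.
TRANSFERS_PER_CYCLE_DDR = 2


def io_clock_mhz(mts: float) -> float:
    """Return the I/O clock frequency (MHz) for a DDR data rate (MT/s)."""
    return mts / TRANSFERS_PER_CYCLE_DDR


def data_rate_mts(mhz: float) -> float:
    """Return the effective DDR data rate (MT/s) for an I/O clock (MHz)."""
    return mhz * TRANSFERS_PER_CYCLE_DDR


if __name__ == "__main__":
    for name, rate in [("DDR4-3200", 3200), ("DDR5-7200", 7200)]:
        print(f"{name}: {rate} MT/s at {io_clock_mhz(rate):.0f} MHz I/O clock")
```

For the speed grades mentioned above, this gives a 1600 MHz clock for DDR4-3200 and a 3600 MHz clock for DDR5-7200.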

To illustrate the impact of speed on overall performance, we outline the following scenario:

Consider a company whose customer relationship management (CRM) system depends on fast data access and transfer. Similar online transaction processing (OLTP) systems are used, for example, by financial service providers, in reservation systems (e.g., of airlines or hotels) or in e-commerce. What they all have in common is that fast and reliable data processing is crucial.

Assume that the CRM system currently uses DDR4 modules running at 3200 megatransfers per second (MT/s) and needs an average of 10 milliseconds (ms) to process a request. Upgrading to DDR5 modules that reach up to 7200 MT/s could reduce the processing time for the same request to about 5 ms, provided that memory is the limiting factor. This would halve the processing time per request, so the system could handle roughly twice as many requests in the same time, which can noticeably improve the experience for employees and customers.

Note: This example merely illustrates the performance differences in terms of speed. The actual differences depend on many factors, such as the system architecture, the specific requirements of the application and the network conditions. In particular, the nominal speed rating (MHz or MT/s) alone says little about the actual data transfer rate, which also depends heavily on the CPU and its memory controller. Nevertheless, the example shows that faster RAM modules can significantly improve performance and responsiveness.
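The estimate in the CRM scenario can be reproduced with a simple model in the spirit of Amdahl's law. This is our own simplification, not a measurement: we assume that a fraction of each request's time is limited by the memory transfer rate and shrinks in proportion to MT/s, while the rest (CPU, network, storage) stays unchanged. The `memory_bound_fraction` parameter is a hypothetical input you would have to determine by profiling.

```python
def estimated_time_ms(old_time_ms: float, old_mts: float, new_mts: float,
                      memory_bound_fraction: float = 1.0) -> float:
    """Estimate request time after a memory speed upgrade (Amdahl's law).

    Assumption: only the memory-bound fraction of the request time speeds up,
    and it speeds up in proportion to the transfer rate (MT/s).
    """
    if not 0.0 <= memory_bound_fraction <= 1.0:
        raise ValueError("memory_bound_fraction must be between 0 and 1")
    unaffected = old_time_ms * (1.0 - memory_bound_fraction)
    memory_part = old_time_ms * memory_bound_fraction * (old_mts / new_mts)
    return unaffected + memory_part


if __name__ == "__main__":
    # 10 ms per request on DDR4-3200, upgraded to DDR5-7200.
    for fraction in (1.0, 0.9, 0.5):
        t = estimated_time_ms(10.0, 3200, 7200, fraction)
        print(f"memory-bound share {fraction:.0%}: {t:.2f} ms")
```

Under these assumptions, a fully memory-bound request would drop to about 4.4 ms, a request that is 90% memory-bound to about 5 ms, and one that is only half memory-bound to about 7.2 ms. This is why the "about 5 ms" figure only holds when memory really is the bottleneck.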

Capacity

RAM capacity has a decisive influence on the overall performance of a server. Servers often have to run multiple applications at once and provide resources to many users or customers simultaneously. Higher RAM capacity allows the server to handle more applications and user requests concurrently without falling back on slower hard disk storage. Even for individual memory-intensive applications (such as graphics and video editing programs), capacity can play a significant role in improving performance.

Samsung's DDR5 modules offer a significantly higher capacity compared to DDR4 modules. DDR4 modules are usually available in capacities of 4 GB, 8 GB, 16 GB or 32 GB, while DDR5 modules can reach capacities of 32 GB, 64 GB or even up to 128 GB.

To illustrate the impact of higher capacity on overall performance, consider another scenario.

Assume that a server is equipped with 64 GB of main memory. If the applications running on it collectively require more than 64 GB to function optimally, the system reaches its limits and performance suffers: data has to be moved to slower storage, and slower response times and delays may occur.

Increasing the total capacity to 128 GB, for example with a 128 GB DDR5 module, doubles the available main memory. Data-intensive applications can then be kept in main memory at the same time instead of being swapped out to a slower hard drive or SSD. This can make applications respond faster and improve overall performance.

It is important to note that the actual performance improvements depend on the specific system configuration and application requirements. Nevertheless, this example shows how the higher capacity of DDR5 modules compared to DDR4 modules can help improve the performance of systems and applications.
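One simple way to reason about the capacity scenario is to compare the combined memory demand of the applications with the installed RAM; whatever exceeds it must be paged out to SSD or HDD. The sketch below is a deliberately coarse model: the application names and sizes are hypothetical, and it ignores operating system overhead and caching.

```python
from typing import Dict


def memory_shortfall_gb(demand_gb: Dict[str, float], installed_gb: float) -> float:
    """Return how many GB of demand do not fit in installed RAM (0 if it fits).

    Simplification: demands are summed as if all applications were active at
    the same time; operating system overhead and page cache are ignored.
    """
    total = sum(demand_gb.values())
    return max(0.0, total - installed_gb)


if __name__ == "__main__":
    # Hypothetical workload mix for illustration only (80 GB in total).
    workload = {"database": 40.0, "crm_app": 16.0, "analytics": 24.0}
    for installed in (64.0, 128.0):
        short = memory_shortfall_gb(workload, installed)
        status = "fits in RAM" if short == 0 else f"{short:.0f} GB paged to disk"
        print(f"{installed:.0f} GB installed: {status}")
```

With this hypothetical 80 GB workload, 16 GB would not fit into 64 GB of RAM, while 128 GB leaves headroom.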

Latency

Working memory latency is the time that elapses before the memory responds to a request. Latency is usually measured in nanoseconds (ns) or as the number of memory cycles and affects the time it takes for a system to respond to requests. Lower latencies mean faster response and better performance.

Compared with DDR4 modules, DDR5 modules have considerably higher CAS latencies (CL) when counted in clock cycles. Because DDR5 modules also run at much higher clock frequencies, however, each cycle is shorter, so the effective latency in nanoseconds remains roughly comparable to that of DDR4.

The effective latency in nanoseconds is calculated by dividing the CAS latency (in clock cycles) by the data rate in MT/s and multiplying the result by 2,000. The factor 2,000 combines the conversion to nanoseconds (1,000) with the two transfers per clock cycle of DDR memory (2). Thus:

Latency (ns) = (CL / data rate in MT/s) × 2,000

DDR5 modules run at a higher clock frequency than DDR4 modules, so each clock cycle is shorter. Although the CAS latency of DDR5 is similar to or higher than that of DDR4 when counted in cycles, the shorter cycle time largely compensates for this, so the effective latency in nanoseconds stays in a similar range.
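The latency formula can be written as "number of cycles times cycle time". The sketch below applies it to a few speed grades. The CL values are example values chosen for illustration; real modules differ by manufacturer and speed bin, so check the datasheet of the module in question.

```python
def cycle_time_ns(data_rate_mts: float) -> float:
    """Duration of one I/O clock cycle in ns (the clock runs at half the MT/s rate)."""
    return 2000.0 / data_rate_mts


def effective_latency_ns(cas_latency: int, data_rate_mts: float) -> float:
    """First-word CAS latency in ns: CL cycles times the cycle time,
    which equals (CL / data rate in MT/s) * 2000."""
    return cas_latency * cycle_time_ns(data_rate_mts)


if __name__ == "__main__":
    # Example speed grades and CL values, for illustration only.
    modules = [("DDR4-3200", 3200, 22), ("DDR5-4800", 4800, 40), ("DDR5-6400", 6400, 46)]
    for name, rate, cl in modules:
        print(f"{name} CL{cl}: {cycle_time_ns(rate):.3f} ns/cycle, "
              f"{effective_latency_ns(cl, rate):.2f} ns")
```

With these example values, DDR5 needs considerably more cycles than DDR4, but each cycle is a third to a half shorter, so the effective latencies land in the same range of roughly 14 to 17 ns.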

Bandwidth

Although speed and bandwidth are not exactly the same thing, they are closely related, as higher speed usually leads to higher bandwidth.

Bandwidth refers to the amount of data that can be transferred per second. It is usually measured in gigabytes per second (GB/s). The bandwidth of a memory module depends on its speed and the width of its data bus. Higher bandwidth means that more data can be transferred in the same amount of time, which improves performance, especially in data-intensive applications.

While DDR4 modules can typically achieve speeds of up to 3200 MT/s (megatransfers per second), DDR5 modules offer speeds of up to 6400 MT/s or even higher (up to 8400 MT/s in some cases). This corresponds to a doubling or more of the memory bandwidth compared to DDR4 modules.
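Theoretical peak bandwidth is the transfer rate multiplied by the number of bytes moved per transfer. The sketch below assumes 64 data bits per module: one 64-bit channel on DDR4, and two independent 32-bit subchannels (also 64 data bits in total) on DDR5. ECC check bits are left out because they carry no payload. Real sustained bandwidth is lower than these peak figures.

```python
def peak_bandwidth_gbs(data_rate_mts: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (decimal: 1 GB = 10**9 bytes).

    data_rate_mts  -- millions of transfers per second (MT/s)
    bus_width_bits -- payload bits per transfer; 64 for a standard DIMM
                      (ECC check bits excluded)
    """
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mts * 1e6 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    for name, rate in [("DDR4-3200", 3200), ("DDR5-6400", 6400), ("DDR5-8400", 8400)]:
        print(f"{name}: {peak_bandwidth_gbs(rate):.1f} GB/s per module")
```

This yields 25.6 GB/s for DDR4-3200, 51.2 GB/s for DDR5-6400 and 67.2 GB/s for DDR5-8400, which matches the "doubling or more" stated above.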

The bandwidth improvement in DDR5 modules compared to DDR4 modules brings some concrete advantages:

- Faster data transfer: Higher bandwidth allows DDR5 modules to process and transfer larger amounts of data faster, improving performance, especially for data-intensive applications such as databases, video editing, or scientific simulations.

- Improved system performance: The higher bandwidth of DDR5 modules enables better multitasking and parallel processing performance, allowing multiple applications and processes to run more efficiently at the same time.

- Scalability: The higher bandwidth of DDR5 memory modules enables systems to scale better as requirements grow. This is especially important in servers, data centers, and high-performance computing where data volumes and processing requirements are constantly increasing.

- Energy efficiency: Despite the higher bandwidth, DDR5 modules are generally more energy efficient than DDR4 modules because they can be operated at a lower voltage. These energy savings can lead to a significant reduction in energy consumption and operating costs in servers and data centers, which we will discuss separately in the next section.

Energy efficiency

Energy efficiency of RAM modules for server applications was important well before the energy crisis. Servers often run around the clock, 365 days a year, and usually use large amounts of memory. Higher energy efficiency lowers operating costs and reduces heat generation, which in turn reduces cooling requirements.

Samsung DDR5 modules are again more energy efficient than previous generations: a lower operating voltage (DDR5: 1.1 V; DDR4: 1.2 V; DDR3: 1.5 V), improved power management (DDR5 modules carry their own power management IC) and improved on-die termination reduce overall energy consumption and thus CO2 emissions.
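A common first-order model for CMOS switching power is P ≈ C · V² · f, so at the same capacitance and frequency, dynamic power scales with the square of the supply voltage. The sketch below applies that model to the voltages listed above and also converts a continuous power draw into annual energy for a server running around the clock. It ignores static power, regulator losses and the fact that DDR5 also runs at higher frequencies, so the results are only illustrative; the 5 W per-module figure is a hypothetical value.

```python
HOURS_PER_YEAR = 24 * 365  # servers that run around the clock


def relative_dynamic_power(new_voltage: float, old_voltage: float) -> float:
    """Ratio of dynamic power at new vs. old voltage, assuming P ~ C * V^2 * f
    with capacitance C and frequency f held constant."""
    return (new_voltage / old_voltage) ** 2


def annual_energy_kwh(average_power_w: float) -> float:
    """Energy in kWh for a device drawing average_power_w continuously for a year."""
    return average_power_w * HOURS_PER_YEAR / 1000


if __name__ == "__main__":
    for label, old_v in (("DDR4", 1.2), ("DDR3", 1.5)):
        ratio = relative_dynamic_power(1.1, old_v)
        print(f"DDR5 (1.1 V) vs {label} ({old_v} V): {1 - ratio:.0%} less dynamic power")
    # Hypothetical module drawing 5 W on average.
    print(f"5 W around the clock: {annual_energy_kwh(5.0):.1f} kWh per year")
```

Under this simplified model, the voltage drop alone cuts dynamic power by about 16% compared with DDR4 and about 46% compared with DDR3; a hypothetical 5 W module running all year consumes 43.8 kWh.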

Security

Under security, we group aspects that, on the one hand, support stable operation with large amounts of memory and, on the other hand, improve data security.

RDIMM

Unlike UDIMMs and SODIMMs, RDIMMs (Registered Dual Inline Memory Modules) have a register chip that acts as a buffer between the memory controller and the memory chips. The register buffers and re-drives the command and address signals before they reach the memory chips, which reduces the electrical load on the memory controller. This stabilization allows more memory modules to be used in a system while improving signal integrity and memory reliability.

Overall, buffered memory such as RDIMMs provides more stable and reliable memory communication in systems with high memory capacities or complex signal paths. Unbuffered memory such as UDIMMs and SODIMMs lacks the register stage and therefore usually responds slightly faster, but may be more susceptible to signal interference.

ECC (Samsung ODECC)

ECC stands for "Error Correcting Code". It is a technology used in certain RAM modules, such as ECC RDIMMs (Registered Dual In-line Memory Modules). In simple terms, ECC automatically detects and corrects errors, such as flipped bits, that can occur while data is stored in or transferred from RAM. These errors can be caused, for example, by electromagnetic interference, radiation or other disturbances. If such errors are not corrected, they can in some cases lead to system crashes or data loss.

For the average home user, ECC-RAM is usually not required because the probability of errors leading to serious problems is relatively low. Servers in data centers or critical systems, on the other hand, require high reliability and stability, since in some applications a small error can have a major impact and even lead to costly failures.

The use of ECC RAM minimizes the risk of such problems by providing an additional layer of security.
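To show the principle behind ECC, the sketch below implements a toy Hamming(7,4) code: three check bits protect four data bits, and the "syndrome" computed on read points directly at a single flipped bit so it can be corrected. Server ECC modules typically use larger SECDED codes (single-error correction, double-error detection) over 64-bit words; this small example is only an illustration of the idea, not the code used by any particular module or memory controller.

```python
from typing import List, Tuple


def hamming74_encode(data: List[int]) -> List[int]:
    """Encode 4 data bits into a 7-bit Hamming codeword (positions 1..7).

    Check bits sit at positions 1, 2 and 4; data bits at 3, 5, 6 and 7.
    """
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword: List[int]) -> Tuple[List[int], int]:
    """Correct up to one flipped bit; return (data bits, error position or 0)."""
    bits = list(codeword)
    syndrome = 0
    # XOR of the positions of all set bits is 0 for a valid codeword;
    # after a single bit flip it equals the position of the flipped bit.
    for position, bit in enumerate(bits, start=1):
        if bit:
            syndrome ^= position
    if syndrome:
        bits[syndrome - 1] ^= 1  # flip the faulty bit back
    return [bits[2], bits[4], bits[5], bits[6]], syndrome


if __name__ == "__main__":
    stored = hamming74_encode([1, 0, 1, 1])
    stored[4] ^= 1  # simulate a single bit flip at position 5
    recovered, position = hamming74_decode(stored)
    print(recovered, position)
```

Running it prints `[1, 0, 1, 1] 5`: the original data is recovered and the flipped bit is located. Two flipped bits would be miscorrected by this toy code, which is why real ECC memory adds an extra check bit for double-error detection.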

ODECC stands for "On-Die Error Correcting Code" and is Samsung's implementation of error correction directly in the memory chip. With conventional ECC, additional memory chips on the module store check bits, and the memory controller uses them to detect and correct errors. With ODECC, by contrast, error correction takes place directly on the memory chip (also referred to as the "die").

More information about Samsung ODECC:

- Integration: Samsung ODECC is integrated directly into the memory chip, so error correction takes place at a lower level, before the data leaves the chip. This can also help keep latency low and performance high.

- Efficiency: Since ODECC works directly on the die, it can react faster and more efficiently to errors and correct them. This can improve the overall performance of the memory module.

- Space saving: Because error correction takes place directly on the die, this layer of protection needs no additional chips on the module. However, on-die ECC does not replace conventional module-level ECC: server ECC RDIMMs still carry extra chips for the check bits used by the memory controller.

- Reliability: By integrating ODECC into the memory chip itself, errors can be detected and corrected more quickly. This can lead to higher reliability and longevity of the memory module.

Overall, ODECC extends conventional ECC technology by integrating error correction directly into the memory chip. It complements module-level ECC rather than replacing it and can improve the performance, efficiency and reliability of the memory chips themselves.

Conclusion

The main differences between server RAM and consumer and industrial RAM are the performance and security requirements.

- Performance is achieved, for example, through higher speed and bandwidth as well as lower latencies.

- Security is primarily achieved by using RDIMM memory modules and Error Correcting Code (ECC) technology - usually in combination with each other.

In this way, application areas such as cloud computing, virtualization and high-performance computing get RAM that meets their requirements.

Do you still have questions? Then feel free to contact our experts at any time. They will be happy to help you find the best RAM for your server's requirements.
