Supermicro H13: Unleashing AI and ML Potential

In this and the following articles, we take a closer look at Supermicro's new H13 product line, which offers a particularly powerful and efficient platform for many application areas. It achieves this with the latest technologies, such as:

- 4th-generation AMD EPYC™ processors,

- AMD 3D V-Cache™ technology,

- support for DDR5-4800 memory, and

- the PCIe 5.0 standard.

In this article, we focus on the use of the H13 product line for artificial intelligence (AI) and machine learning (ML).

What you can expect:

Table of contents

- Background: artificial intelligence and machine learning - what is it?

- Why AI and ML need so much computing power

- The most important facts about the Supermicro H13 product line for AMD EPYC™ 9004 series server processors

- Why H13 systems are particularly well suited for AI and ML

- Which products in the line are particularly suitable for AI and ML

- Application scenario: a soccer club uses ML

- Conclusion: increased computing power and exceptional energy efficiency

Background: artificial intelligence and machine learning - what is it?

AI (Artificial Intelligence) and ML (Machine Learning) are two overlapping fields that cover a wide range of technologies and applications. They are closely related to the development and application of algorithms that enable computers to perform tasks that normally require human thought, such as recognizing patterns, understanding language, making decisions, and learning from experience.

Artificial Intelligence

Artificial intelligence (AI) generally refers to the ability of machines to exhibit intelligent behavior similar to that of humans. This ranges from simple rules and decision trees to complex approaches based on machine learning, deep neural networks, and other advanced algorithms.

Machine Learning

Machine learning (ML), a subfield of AI, focuses on developing algorithms and statistical models that allow computers to perform tasks without being explicitly programmed for them. In ML, models are trained on data and then make predictions or decisions based on the patterns they have learned from that data. As new data becomes available, the models can be retrained and improve over time.
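The idea of "learning from data instead of explicit programming" can be illustrated with a minimal sketch. It is not tied to any of the frameworks mentioned in this article: a one-variable linear model is fitted with plain gradient descent. The function name `fit_line`, the learning rate, and the training data are hypothetical, chosen only for illustration.

```python
def fit_line(xs, ys, lr=0.01, epochs=5000):
    """Fit y ~ w*x + b by minimizing the mean squared error with gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical training data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

w, b = fit_line(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")  # -> learned w=2.000, b=1.000
prediction = w * 5.0 + b  # prediction for an input the model has not seen
```

The program never contains the rule "y = 2x + 1"; the parameters are recovered from the examples alone. Real models have millions or billions of parameters instead of two, which is where the computing demands discussed below come from.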

Why AI and ML need so much computing power

Computational power plays a critical role in AI and ML. Training ML models, especially deep neural networks, is extremely computationally intensive. It requires a very large number of complex computations, often on huge datasets, and can take hours, days, or even weeks, depending on the complexity of the model, the size of the training dataset, and, last but not least, the available hardware.
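How model size, dataset size, and hardware interact can be made concrete with a back-of-the-envelope estimate. The sketch below assumes the commonly cited rule of thumb that training a dense transformer model costs roughly 6 × parameters × training tokens floating-point operations; the model size, token count, accelerator throughput, and utilization are hypothetical placeholders, not measurements of any H13 system.

```python
def estimate_training_days(params, tokens, peak_flops_per_device, devices, utilization):
    """Rough wall-clock estimate for training a dense transformer.

    Assumes total training compute ~= 6 * params * tokens FLOPs
    (a rule of thumb, not an exact figure).
    """
    total_flops = 6 * params * tokens
    # FLOP/s actually achieved across all devices
    effective_flops = peak_flops_per_device * devices * utilization
    seconds = total_flops / effective_flops
    return seconds / 86_400  # seconds per day

# Hypothetical example: 1e9-parameter model, 2e10 training tokens,
# 8 accelerators at 1e15 FLOP/s peak each, 40% average utilization
days = estimate_training_days(1e9, 2e10, 1e15, 8, 0.4)
print(f"~{days:.2f} days")  # -> ~0.43 days
```

With a single device instead of eight, the same estimate grows eightfold, which is why the available hardware appears alongside model and dataset size as a deciding factor.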

With more computing power, AI and ML models can be trained faster, which also makes larger and more accurate models practical. State-of-the-art hardware such as GPUs, TPUs, and specialized AI accelerators provides the necessary computing power to train these models. Beyond raw performance, the hardware architecture also matters, especially its support for parallel processing and for the mathematical operations (such as matrix multiplications) that dominate AI and ML workloads.

Of course, AI and ML require not only powerful hardware but also a variety of software tools, libraries, and frameworks (such as TensorFlow, PyTorch, Keras, and many others). They are also supported by parallel-computing technologies, for example OpenCL and NVIDIA's CUDA platform, which has become the dominant ecosystem for GPU computing. These technologies enable developers to implement algorithms efficiently and to train models on CPUs and GPUs. APIs such as DirectX can also be relevant for specific tasks.

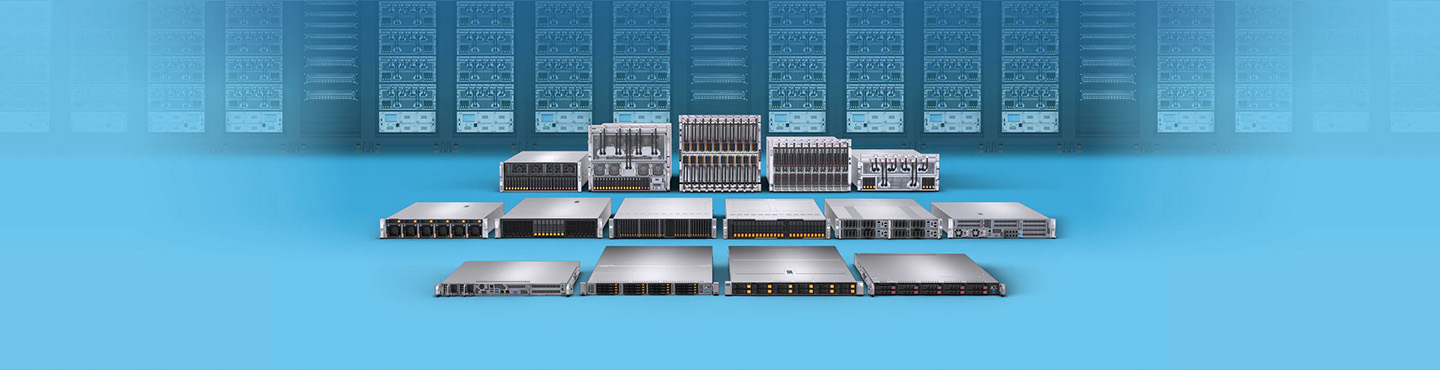

The most important facts about the Supermicro H13 product line for AMD EPYC™ 9004 series server processors

- Powerful 4th-generation AMD EPYC processors with up to 128 cores / 256 threads (up from 64 cores / 128 threads in the previous generation).

- Significantly more cache: support for AMD 3D V-Cache™ technology increases the L3 cache to up to 1,152 MB per CPU, a 50% increase over previous-generation AMD EPYC processors with AMD 3D V-Cache technology.

- Faster communication between the CPU and other components: support for PCIe 5.0, which doubles the per-lane transfer rate of the previous generation's PCIe 4.0 (32 GT/s instead of 16 GT/s).

- Greater addressable memory capacity: up to 6 TB of DRAM per socket, thanks to more memory channels (12 instead of 8).

- Higher memory bandwidth: up to 57,600 MT/s (megatransfers per second) per socket across all channels, a 125% improvement over the previous generation.

- Faster memory: support for DDR5-4800 memory, whose transfer rate is 50% higher than that of the DDR4-3200 memory supported by the previous generation.

- Faster communication between CPUs: more and faster xGMI links.

- AI optimization: support for AVX-512 instructions speeds up AI calculations (among x86 server processors, AVX-512 was previously available only on Intel® Xeon® models such as the Xeon® Scalable family).

- Increased data security: security features built on a "Security by Design" approach better protect data at all stages of processing.

- Higher energy efficiency: EPYC 9004 CPUs can handle the same workload with fewer servers than EPYC 7002/7003 CPUs.

- Connectivity to the latest technologies: 128 PCIe 5.0 lanes in single-socket servers and up to 160 PCIe 5.0 lanes in dual-socket servers. This enables the increasingly demanded integration of accelerators, GPUs, NVMe flash devices, FPGAs, and high-performance network adapters.
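Several of the percentages above follow from simple arithmetic. The sketch below recomputes them under stated assumptions: 12 DDR5-4800 channels per socket for EPYC 9004, 8 DDR4-3200 channels for the previous generation, 8 bytes (64 data bits) per channel per transfer, and L3 cache sizes of 768 MB and 1,152 MB for the previous and current 3D V-Cache parts. The GB/s value is a theoretical peak, not measured bandwidth.

```python
def pct_increase(old, new):
    """Percentage increase from old to new."""
    return (new / old - 1) * 100

# Assumed memory configurations per socket
genoa_channels, genoa_mts = 12, 4800  # EPYC 9004: 12 x DDR5-4800
milan_channels, milan_mts = 8, 3200   # EPYC 7003: 8 x DDR4-3200

# Aggregate transfers per second across all channels (MT/s)
genoa_total_mts = genoa_channels * genoa_mts
milan_total_mts = milan_channels * milan_mts

BYTES_PER_TRANSFER = 8  # 64-bit data path per channel (ECC bits excluded)
genoa_peak_gbs = genoa_total_mts * BYTES_PER_TRANSFER / 1000  # MB/s -> GB/s

print(f"EPYC 9004: {genoa_total_mts:,} MT/s, ~{genoa_peak_gbs:.1f} GB/s theoretical peak per socket")
print(f"Aggregate bandwidth increase: {pct_increase(milan_total_mts, genoa_total_mts):.0f}%")
print(f"Per-channel rate increase (DDR4-3200 -> DDR5-4800): {pct_increase(milan_mts, genoa_mts):.0f}%")
print(f"L3 cache increase (768 MB -> 1,152 MB): {pct_increase(768, 1152):.0f}%")
```

The 125% bandwidth gain is the product of two factors: 1.5 times as many channels and a 1.5 times higher transfer rate per channel (1.5 × 1.5 = 2.25).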

Why the H13 systems are particularly well suited for AI and ML

Supermicro's H13 server series is very well suited for artificial intelligence and machine learning because of the demanding requirements that AI and ML workloads place on hardware. These workloads need high computing power, as they process massive amounts of data and perform complex calculations. The powerful 4th-generation AMD EPYC processors with up to 128 cores are ideally suited for this.

In addition, these processors support AVX-512, an extension of the x86 instruction set architecture that, among x86 server processors, was previously available only on Intel® Xeon® models. AVX-512 widens the SIMD registers to 512 bits, so a single instruction can process many values in parallel (for example, 16 single-precision floating-point numbers). This accelerates AI computations and improves the performance of AI applications.
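The parallelism in AVX-512 is SIMD: one instruction operates on every element packed into a 512-bit register. The short sketch below counts how many values of common AI data types fit into one register. It counts register lanes only; how many such instructions a core completes per clock cycle depends on the microarchitecture and is not modeled here.

```python
REGISTER_BITS = 512  # width of an AVX-512 (ZMM) register

# Element sizes in bits for data types commonly used in AI workloads
dtype_bits = {"fp64": 64, "fp32": 32, "bf16": 16, "int8": 8}

def lanes_per_register(element_bits, register_bits=REGISTER_BITS):
    """Number of elements a single SIMD instruction can process at once."""
    return register_bits // element_bits

for name, bits in dtype_bits.items():
    print(f"{name}: {lanes_per_register(bits)} values per instruction")

# For comparison: 256-bit AVX2 registers hold half as many fp32 values
print(f"AVX2 fp32: {lanes_per_register(32, register_bits=256)} values per instruction")
```

Lower-precision formats such as bf16 or int8 pack more values into each register, which is one reason they are popular for AI inference.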

Equally important is the speed of data processing. AI and ML applications often need access to large amounts of data and must be able to process it efficiently. The H13 series offers a large memory capacity of up to 6 TB of addressable DRAM per socket and supports DDR5-4800 memory. This speeds up data processing, which is especially important for data-intensive AI and ML applications.
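The 6 TB per-socket figure can be reproduced under an assumed configuration of 12 memory channels, two DIMMs per channel, and 256 GB RDIMMs. The sketch below also checks whether a hypothetical dataset fits into RAM; the 20% headroom reserved for the operating system and frameworks is an arbitrary assumption. The configurations actually supported depend on the specific motherboard and should be checked in its documentation.

```python
def max_memory_gb(channels, dimms_per_channel, dimm_gb):
    """Total DRAM capacity for one socket in GB."""
    return channels * dimms_per_channel * dimm_gb

def fits_in_memory(dataset_gb, capacity_gb, headroom=0.2):
    """True if the dataset fits while leaving a fraction of RAM free for OS and framework overhead."""
    return dataset_gb <= capacity_gb * (1 - headroom)

# Assumed configuration: 12 channels x 2 DIMMs per channel x 256 GB
capacity = max_memory_gb(channels=12, dimms_per_channel=2, dimm_gb=256)
print(f"{capacity} GB = {capacity / 1024:.0f} TB per socket (binary units)")

# Hypothetical 4,000 GB working set
print(fits_in_memory(dataset_gb=4000, capacity_gb=capacity))
```

Keeping the working set in DRAM avoids repeatedly reading it from storage during training, which is where the large capacity pays off.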

In addition, the increased number of PCIe lanes makes it easy to integrate and use high-performance AI accelerators, GPUs, and FPGAs. These hardware accelerators can further increase the computing speed and thus improve the performance of AI and ML applications. With all these features, coupled with the security and energy efficiency benefits of the series, Supermicro's H13 series is an excellent platform for demanding AI and ML applications.
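Why the lane count matters can be seen in a simple lane budget. The sketch below assumes that every accelerator uses a full x16 link and that any lanes needed for NVMe drives or network cards are passed in explicitly as `reserved_lanes` (a hypothetical parameter). It also computes the approximate one-direction bandwidth of a link from the per-lane rates of PCIe 4.0 (16 GT/s) and PCIe 5.0 (32 GT/s) with 128b/130b encoding, ignoring protocol overhead.

```python
PCIE_GT_PER_LANE = {4: 16.0, 5: 32.0}  # GT/s per lane for PCIe 4.0 and 5.0
ENCODING_EFFICIENCY = 128 / 130          # 128b/130b line coding (PCIe 3.0 and later)

def link_bandwidth_gbs(gen, lanes):
    """Approximate one-direction bandwidth of a PCIe link in GB/s (protocol overhead ignored)."""
    return PCIE_GT_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY / 8  # bits -> bytes

def x16_slots(total_lanes, reserved_lanes=0):
    """How many full x16 devices fit into the remaining lane budget."""
    return (total_lanes - reserved_lanes) // 16

print(f"PCIe 5.0 x16: ~{link_bandwidth_gbs(5, 16):.1f} GB/s per direction")
print(f"PCIe 4.0 x16: ~{link_bandwidth_gbs(4, 16):.1f} GB/s per direction")

# 1-socket (128 lanes) vs. 2-socket (160 lanes), and 128 lanes with 32 kept for NVMe/network
print(x16_slots(128), x16_slots(160), x16_slots(128, reserved_lanes=32))
```

In practice, some lanes are always needed for storage and networking, so the number of full-bandwidth accelerator slots is lower than the raw lane count divided by 16.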

Which products in the line are particularly suitable for AI and ML

H13 4U/8U NVIDIA HGX H100 GPU Systems

This is the ultimate eight-GPU (octa-GPU) system based on NVIDIA HGX H100, designed for the most demanding machine learning workloads and as a next-generation machine learning platform. It combines the computing power of NVIDIA GPUs with NVIDIA® NVLink® for the fastest multi-GPU communication, NVIDIA networking and, on request, a fully optimized NVIDIA AI and HPC software stack from the NVIDIA NGC™ catalog for maximum application performance.

You can find more information here:

https://www.supermicro.com/en/products/system/gpu/4u/sys-421gu-tnxr

H13 4U GPU Systems for up to 8 Double-Width PCIe NVIDIA or AMD GPUs

The little brother of the 4U/8U HGX variant, and a bestseller among PCIe GPU compute servers, is still extremely powerful, but here the focus is even more on versatility and flexibility. For example, you can easily configure the system to match your accelerator and GPU requirements.

You can find more information here:

https://www.supermicro.com/datasheet/datasheet_H13_4UGPU.pdf

Note

Choosing the right server for your use case is a complex matter, especially for AI or ML applications. That is why our Custom Server Solutions team is always available to answer any questions you may have. Together, we will find the ideal server for your application scenario, with the desired performance and/or density at the lowest possible power consumption and investment cost!

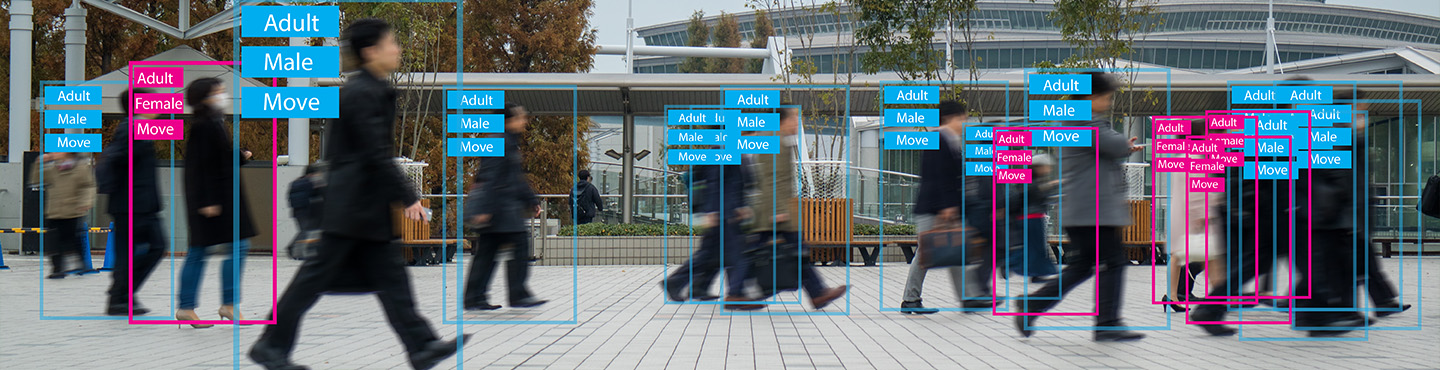

Application scenario: a soccer club uses ML

As an example, consider a professional soccer club that wants to use image recognition and machine learning to track the training and match behavior of individual players on its men's team in order to

- assess their performance (acceleration, speed, ball contacts, etc.),

- predict their performance for the next match day,

- prevent injuries, and

- design and improve tactics.
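Metrics such as speed and acceleration are typically derived from player positions tracked frame by frame. The minimal sketch below assumes that positions have already been extracted from the video as (x, y) coordinates in meters at a fixed frame rate; the function name and the sample track are hypothetical. Real systems usually smooth the noisy positions before differentiating them.

```python
import math

def speeds_and_accelerations(positions, fps):
    """Finite-difference speed (m/s) and acceleration (m/s^2) from (x, y) positions in meters."""
    dt = 1.0 / fps
    # Speed over each frame interval: distance travelled / time step
    speeds = [math.dist(p0, p1) / dt for p0, p1 in zip(positions, positions[1:])]
    # Acceleration: change in speed between consecutive intervals / time step
    accelerations = [(v1 - v0) / dt for v0, v1 in zip(speeds, speeds[1:])]
    return speeds, accelerations

# Hypothetical track: a player speeding up along the x axis, filmed at 25 frames per second
track = [(0.0, 0.0), (0.2, 0.0), (0.408, 0.0), (0.624, 0.0)]
v, a = speeds_and_accelerations(track, fps=25)
print([round(s, 2) for s in v], [round(x, 2) for x in a])  # -> [5.0, 5.2, 5.4] [5.0, 5.0]
```

The higher the frame rate, the smaller the time step and the finer the motion detail that can be resolved, at the cost of more data per second of footage.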

Where the large amount of data comes from

For the precise analysis of the smallest motion sequences down to the millisecond range, high-resolution videos with as many frames per second as possible and from different angles are required. This creates an enormous amount of raw data that the ML model needs to deliver good results.
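To give a sense of scale, the raw (uncompressed) volume of a multi-camera recording is resolution × bytes per pixel × frame rate × number of cameras × duration. The camera count, resolution, and frame rate below are hypothetical. Recordings are normally stored compressed, so files on disk are much smaller, but frames must be decoded for analysis, so processing works with data of roughly this raw size.

```python
def raw_video_bytes(width, height, bytes_per_pixel, fps, cameras, seconds):
    """Uncompressed size of all frames recorded by a multi-camera setup."""
    bytes_per_frame = width * height * bytes_per_pixel
    return bytes_per_frame * fps * cameras * seconds

# Hypothetical setup: 8 cameras, 4K (3840x2160), 8-bit RGB, 100 fps, one 90-minute match
total = raw_video_bytes(3840, 2160, 3, fps=100, cameras=8, seconds=90 * 60)
print(f"~{total / 1e12:.1f} TB of raw frames")  # -> ~107.5 TB of raw frames
```

At 100 frames per second, consecutive frames are 10 ms apart; resolving motion even more finely would increase the volume proportionally.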

How the H13 product line can help here

Here, the club benefits from the high memory capacity of the H13 servers (up to 6 TB of addressable DRAM per socket, with support for DDR5-4800 memory), which allows the large volume of image data to be processed quickly and efficiently.

In addition, AMD's 4th-generation EPYC processors with up to 128 cores enable fast execution of the complex calculations required for image recognition. Support for AVX-512 instructions also plays an important role, as it accelerates AI workloads.

The 128 PCIe lanes available with even a single AMD EPYC CPU are another major advantage. They allow many hardware accelerators such as GPUs or FPGAs to be integrated without running into the familiar bottleneck of too few PCIe lanes. These accelerators significantly speed up ML tasks and thus the training and use of the ML model.

In short, the Supermicro H13 product line is an excellent choice for our soccer club.

Other use cases

In other use cases, such as working with sensitive data (e.g. in medical technology), the security advantages of the H13 are of particular value.

Last but not least, energy efficiency to reduce emissions and costs is an important factor for any organization (from soccer clubs to data centers) today.

Conclusion: increased computing power and exceptional energy efficiency

In summary, Supermicro's H13 product line represents a significant development in the field of high-performance servers. By using the latest technologies, including 4th-generation AMD EPYC processors, an enlarged L3 cache, support for DDR5-4800 memory, and the PCIe 5.0 standard, Supermicro offers high-performance solutions that are ideal for a wide range of applications, including artificial intelligence and machine learning. The flexible integration of current AMD and NVIDIA GPUs, in PCIe systems as well as on NVIDIA HGX platforms, further broadens the range of possible applications. Increased computing power, combined with exceptional energy efficiency and outstanding security features, makes the H13 product line an excellent choice for businesses looking to address their technical challenges while optimizing their total cost of ownership.

H13 servers can significantly increase performance and efficiency, both in traditional IT environments and in specialized areas such as AI and ML. Whether it's data-intensive workloads, complex scientific simulations or advanced AI models, Supermicro's H13 product line is designed to meet the challenges of today and tomorrow. With the H13 series, Supermicro continues to expand its position as a leader in high-performance servers.


You can also find more information about the H13 product line on Supermicro's website.
